Generative UX is no longer a conference talking point — it’s in the trenches.

From corporate design labs to university workshops, teams are experimenting with ways to let generative AI co-design interfaces, summarize research, and even shape prototypes. Sometimes it works brilliantly. Sometimes it breaks in unexpected, illuminating ways.

This isn’t a highlight reel — it’s a field report from the messy middle: what we’ve actually tried, what’s fallen apart, and what we’re beginning to understand about putting generative UX into the real world.

When the UX team at LexisNexis’ Tolley division began experimenting with their internal large language model, the goal was simple: eliminate research bottlenecks.

Typically, six to eight one-hour interviews could take weeks to process, with designers manually tagging transcripts, clustering insights, and surfacing patterns.

By feeding transcripts into their enterprise LLM and building a structured “prompt deck,” they found the model could rapidly summarize key themes, extract quotes, and flag pain points — compressing weeks of work into hours.

The catch?

Some summaries missed nuance, and occasionally the AI “hallucinated” participant quotes. It was fast but fallible. Designers learned the essential rule: AI is a brilliant assistant, not a trustworthy analyst. It can accelerate synthesis but still needs human interpretation to make the insights real.
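One lightweight guardrail against invented quotes is to check every quote the model returns against the source transcripts before it reaches a report. The sketch below is a minimal illustration, not the Tolley team's method: the function names, the matching rules, and the sample transcripts are all hypothetical, and it only catches quotes that do not appear verbatim (after light normalization). A quote that passes still needs a human to confirm it is used in context.

```python
import re


def normalize(text: str) -> str:
    """Lowercase, unify curly quotes, drop punctuation, and collapse whitespace."""
    text = text.lower()
    text = text.replace("\u2019", "'").replace("\u201c", '"').replace("\u201d", '"')
    text = re.sub(r"[^\w\s']", " ", text)  # keep word characters and apostrophes
    return re.sub(r"\s+", " ", text).strip()


def find_unverified_quotes(quotes, transcripts):
    """Return the quotes that do not appear in any transcript.

    `quotes` is a list of strings produced by the model; `transcripts`
    maps a participant label to the full transcript text.
    """
    normalized = {name: normalize(body) for name, body in transcripts.items()}
    unverified = []
    for quote in quotes:
        needle = normalize(quote)
        # An empty quote, or one found in no transcript, is flagged for review.
        if not needle or not any(needle in body for body in normalized.values()):
            unverified.append(quote)
    return unverified


# Invented sample data, for illustration only.
transcripts = {
    "participant_01": "Honestly, I spend most of my morning searching for the right form.",
    "participant_02": "The export button is hidden. I didn't find it for weeks.",
}
model_quotes = [
    "I spend most of my morning searching for the right form",
    "The dashboard makes me feel stupid",  # appears in no transcript
]

flagged = find_unverified_quotes(model_quotes, transcripts)
print(flagged)
```

Exact matching is deliberately strict: a paraphrased quote is flagged too, which suits a workflow where anything presented in quotation marks should be traceable to a participant's actual words.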

A similar insight surfaced with ChatGPT Enterprise’s Deep Research capability in 2025. It could process large sets of material (transcripts, screenshots, PDFs) and ground its analysis in specific documents.

Suddenly, AI could reference real context rather than generalize from training data. It felt like a leap forward.

But the boundaries showed quickly. The model sometimes ignored the very context it was supposed to anchor on or overemphasized minor details. What ultimately saved the process wasn’t the model — it was the rigor of researchers who reframed, verified, and filtered outputs into insights stakeholders could trust.

Generative UX was powerful — but only when paired with discipline.

In academia, a team designing a data analytics interface for highway traffic engineers adopted a workflow known as “vibe coding.”

Instead of handcrafting prototypes, they prompted generative models to create dozens of UI variations on command. Within one feedback session, engineers could react to multiple interface options, pointing out what confused or resonated.

The approach collapsed design cycles from weeks to hours — a massive productivity leap.

Yet the generated interfaces often misunderstood real workflows. Engineers still needed guidance to navigate them, and much of the generated code required cleanup before it was usable.

The takeaway: speed without alignment can create elegant detours to nowhere.

Generative UX is expanding beyond pixels.

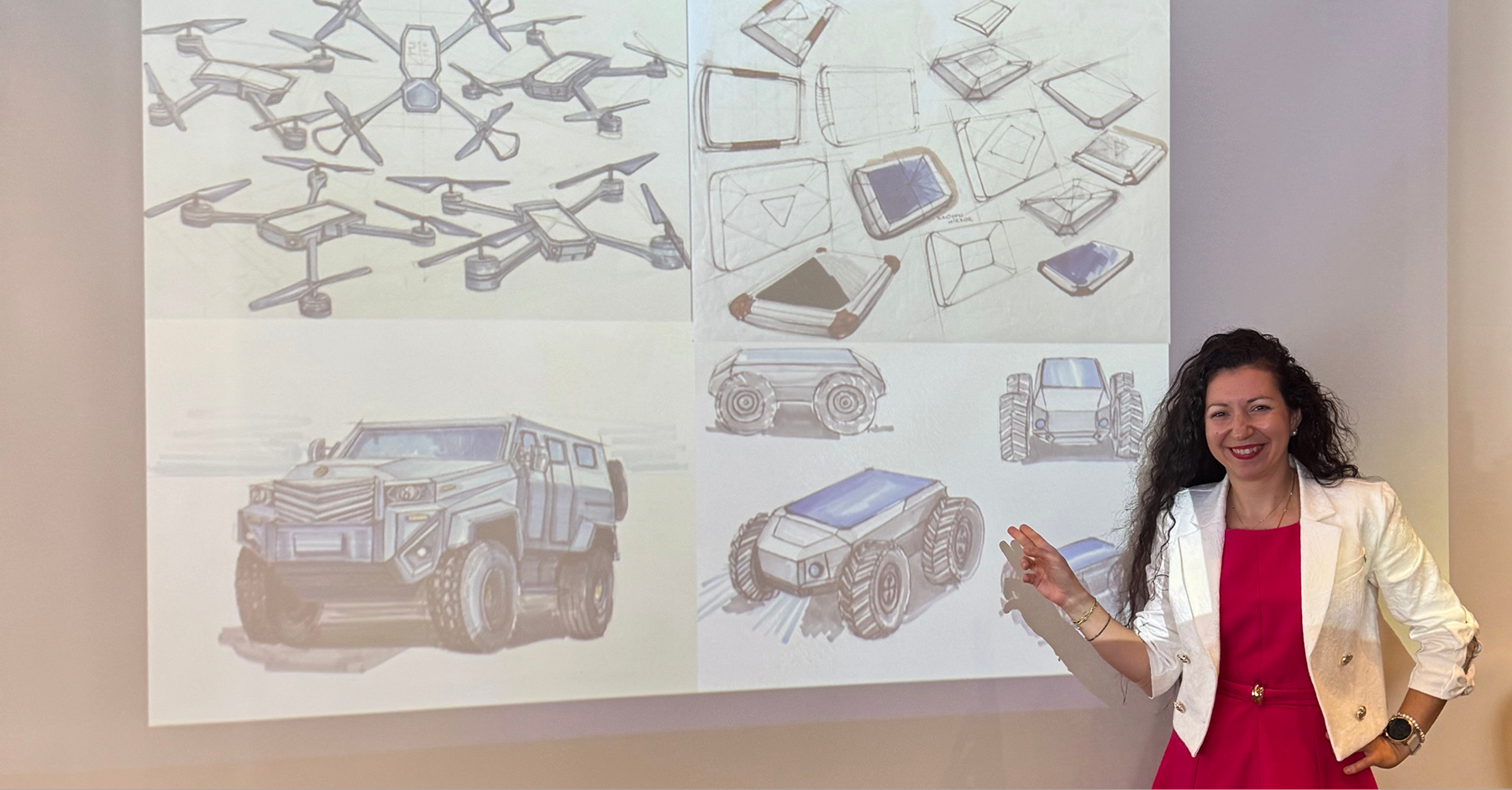

In product design, companies have begun using image-generation tools to accelerate early concept exploration. McKinsey’s research reports that teams can produce dozens of packaging or hardware mockups in a fraction of the time — cutting concept cycles by up to 70%.

The visual payoff was undeniable. But many of these AI-driven designs looked beautiful yet ignored the constraints of manufacturing, materials, or cost.

Once again, human expertise became the bridge — grounding imagination in feasibility. AI sparked the ideas, but engineers had to bring them down to earth.

Across every experiment, the same lesson repeats: Generative UX shines in exploration, not execution.

It can summarize interviews, but not interpret emotion.

It can generate ten prototypes, but not judge which respects a user’s workflow.

It can visualize futuristic designs, but not calculate tensile strength or production cost.

When human expertise leaves the loop, the process unravels.

Practitioners now emphasize human-in-the-loop workflows, precise prompt engineering, and tight iteration cycles with real users. Without those guardrails, generative UX quickly turns insight into noise.

Generative UX is both exhilarating and humbling. It lets teams explore ten times more ideas — and get lost ten times faster. It can compress weeks of research into days, but every shortcut still demands validation.

For UX leaders and product managers, the guidance is clear:

Innovation doesn’t come from the AI’s output — it comes from how we interpret and refine what it gives us.

Generative UX isn’t replacing designers; it’s reshaping what design means.

The future belongs to teams that can balance machine speed with human depth — turning imperfect drafts into the next leap forward.

Generative UX won’t design for you. But it will make you rethink what design can be.
